Minimum viable products (MVPs) have evolved from a scrappy startup tactic to a mainstream product-development philosophy, now embraced by Fortune 500 companies and student founders alike. Over almost two decades, the concept has broadened, splintered, and adapted to new technologies—yet its core goal remains the same: learn as much as possible, as fast as possible, with the least possible waste.

In this post, we’ll trace that journey, see how each era reshaped the practice, and look ahead to where MVPs might go next.

What is an MVP?

An MVP is not simply the first release of a product. It is engineered to resolve one critical uncertainty. Think of it as an experiment-shaped slice of your vision: just enough functionality for real users to interact with, so you can observe whether your riskiest assumptions hold.

- Purpose-built: Every MVP should revolve around one dominant question. For Uber’s founders, that question was roughly “Will people use their phone to request a ride?”

- Externally validated: Data must come from outside the building—clicks, sign‑ups, dollars spent, churn, qualitative interviews, or ethnographic observations.

- Imperfect by design: Quality is ‘good enough to learn.’ If you polish beyond that, you’re throwing time at the wrong goal.

Pro tip: Frame your riskiest assumption as a falsifiable hypothesis—e.g., “10% of landing‑page visitors will enter their email for early access.” Then build just what you need to test it.
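
One way to make that pro tip concrete is to compare the observed sign-up rate against the target using a confidence interval rather than a raw percentage. The sketch below is an illustration under stated assumptions, not a prescribed method: it uses a Wilson score interval at roughly 95% confidence, and the function names (`wilson_interval`, `evaluate_hypothesis`) and the visitor counts are hypothetical.

```python
import math


def wilson_interval(successes: int, trials: int, z: float = 1.96) -> tuple[float, float]:
    """Wilson score interval for a binomial proportion (z=1.96 is roughly 95%)."""
    if trials <= 0:
        raise ValueError("trials must be positive")
    p_hat = successes / trials
    denom = 1 + z**2 / trials
    center = (p_hat + z**2 / (2 * trials)) / denom
    half_width = z * math.sqrt(
        p_hat * (1 - p_hat) / trials + z**2 / (4 * trials**2)
    ) / denom
    return center - half_width, center + half_width


def evaluate_hypothesis(signups: int, visitors: int, target: float = 0.10) -> str:
    """Classify the result of "at least `target` of visitors will sign up"."""
    low, high = wilson_interval(signups, visitors)
    if low >= target:
        return "supported"      # even the pessimistic estimate clears the bar
    if high < target:
        return "falsified"      # even the optimistic estimate misses the bar
    return "inconclusive"       # the interval straddles the target: keep collecting


# Hypothetical landing-page results
print(evaluate_hypothesis(70, 500))  # supported
print(evaluate_hypothesis(30, 500))  # falsified
print(evaluate_hypothesis(5, 50))    # inconclusive
```

The third case shows why sample size matters: 5 sign-ups from 50 visitors is exactly 10%, yet the interval is too wide to say whether the hypothesis held.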

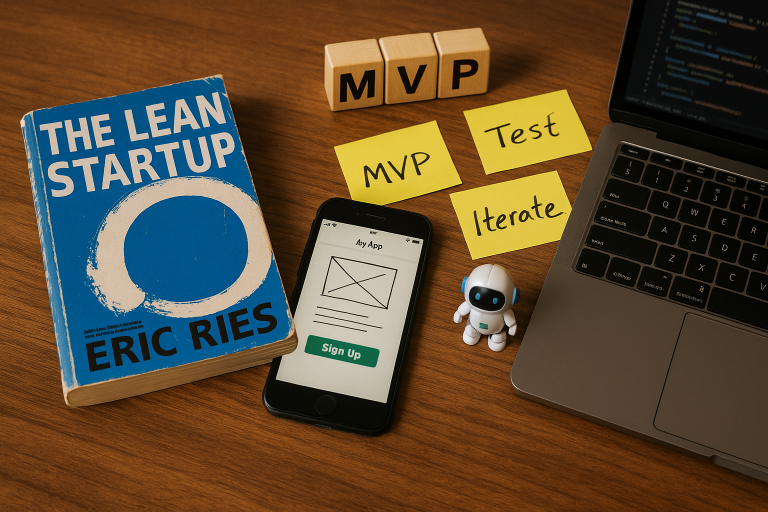

Roots in the lean startup movement

Steve Blank and customer development

In the early 2000s, serial entrepreneur Steve Blank noticed a pattern: tech startups failed not from poor engineering, but from building products nobody wanted. His solution was customer development, a four‑step process (customer discovery, customer validation, customer creation, company building) that required founders to test hypotheses directly with target users before scaling. Blank didn’t use the term MVP, but his insistence on “minimum feature set” prototypes laid the conceptual foundation.

“Get out of the building” became the rallying cry for a generation of founders who realized business plans were guesses until proven otherwise.

Eric Ries popularizes the MVP

Blank’s student Eric Ries synthesized agile engineering, Lean manufacturing, and customer development into what he dubbed The Lean Startup. On his blog (2008) and later in the best‑selling 2011 book, Ries formalized the build‑measure‑learn loop, with the MVP at its heart. He argued that each iteration should be viewed as a scientific experiment—a controlled way to maximize validated learning about customers with the minimal investment of effort.

Within a few years, accelerators like Y Combinator and media outlets like TechCrunch turned “launch an MVP” into the default advice for software founders.

MVPs in the mobile‑first 2010s

The iPhone’s App Store (2008) and Android Market (2008) supercharged lean experimentation:

- Tiny surface area, vast reach: A single‑screen app could reach millions of users. Instagram launched in 2010 with only three core actions—take photo, apply filter, share—and nevertheless onboarded 25,000 users in 24 hours. That validated the bet on mobile‑native photo sharing.

- Cloud plumbing: AWS, Heroku, and Stripe slashed backend and payment friction. Two engineers could now spin up infrastructure in an afternoon, shrinking the cost of failure.

- Playbook formation: The rise of Mixpanel, Optimizely, and Crashlytics made product analytics, A/B testing, and crash reporting cheap to adopt, further codifying the measure portion of the loop.

These forces cemented the cultural expectation that a respectable startup should launch an MVP within weeks, not months.

Design thinking meets MVPs

By the mid-2010s, product teams began marrying design thinking, with its emphasis on empathy, ideation, and rapid prototyping, to lean experimentation:

- Empathize and define: Qualitative interviews and field studies revealed pain points before a single line of code was written.

- Ideate broadly, prototype narrowly: Dozens of sketch concepts were distilled into a single hypothesis‑driven MVP.

- Test user flows in hours: Interactive Figma prototypes or high‑fidelity InVision click‑throughs often served as ‘MVPs’ when the question was purely about usability, not technical feasibility.

This merger shifted attention from “Can we build it?” to “Should we build it?”, elevating user desirability to the same tier as viability and feasibility.

No-code and low‑code: democratizing experimentation

Around 2017, no‑code and low‑code platforms reshaped who could build:

- Bubble & Webflow let non‑technical founders spin up full web apps or SaaS front‑ends without touching JavaScript.

- Airtable & Glide allowed operations teams to create internal tools that looked and felt custom.

- Zapier & Make stitched together payment, email, and data pipelines—so a weekend hacker could accept Stripe payments, store orders in Airtable, and send confirmation emails via SendGrid.

The upshot? Experiment volume exploded. Marketers, PMs, and even students could now run cheap tests, turning MVP culture from an engineering specialty into a company‑wide habit.

AI-powered MVPs: 2020s and beyond

The launch of GPT‑3 (2020) and subsequent commoditization of large‑language‑model APIs marked a step‑change:

- Conversational front‑ends: A simple prompt‑engineering script plus an LLM could impersonate a concierge service, letting founders gauge demand before writing domain‑specific logic.

- Code generation: GitHub Copilot and similar tools can scaffold much of a CRUD app, cutting development time for simple products from weeks to, in some cases, hours.

- Synthetic users: Agent‑based simulations now approximate real user journeys, letting teams stress‑test onboarding flows before live traffic.

AI thereby shortened the build‑measure cycle to the point where MVP and prototype sometimes merge—the test is built into the generative model itself.

Common pitfalls and lessons learned

Even seasoned teams stumble. The most persistent traps include:

| Pitfall | Why it happens | How to avoid |
|---|---|---|
| Vanity MVPs | Building something easy, not something risky | Articulate a single risky assumption first and refuse features that don’t test it |
| Scope creep | Fear of negative feedback | Set a strict timebox (e.g., ship in 2 weeks) and freeze scope once the sprint starts |
| Success theater | Cherry-picking favorable metrics | Pre-commit to a threshold metric and shut down if it isn’t met |
| Survivorship bias | Copying MVP tactics from success stories without matching context | Examine why a tactic worked before porting it |
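
The pre-commitment advice in the table is easier to follow when the success criteria are written down in a form that cannot be quietly edited later. The following is a minimal sketch of that idea, assuming a single numeric metric and a decision deadline; the class name `PreCommitment`, its fields, and the example values are all hypothetical.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)  # frozen: fields cannot be reassigned after creation
class PreCommitment:
    riskiest_assumption: str
    metric: str
    threshold: float   # minimum value of the metric that counts as success
    decide_by: date    # timebox: the date on which a decision is forced

    def decide(self, observed: float, today: date) -> str:
        """Return the action the team committed to before seeing any data."""
        if observed >= self.threshold:
            return "persevere"
        if today >= self.decide_by:
            return "shut down"
        return "keep testing"


# Hypothetical experiment, recorded before launch
commitment = PreCommitment(
    riskiest_assumption="Visitors want early access enough to share an email",
    metric="signup_rate",
    threshold=0.10,
    decide_by=date(2024, 1, 15),
)

print(commitment.decide(observed=0.06, today=date(2024, 1, 10)))  # keep testing
print(commitment.decide(observed=0.06, today=date(2024, 1, 15)))  # shut down
```

Freezing the dataclass is a small guard against success theater: lowering `threshold` after the fact raises an error instead of silently moving the goalposts.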

The future of MVPs

Looking forward, several threads are converging:

- Continuous experimentation: Feature flags and remote‑config frameworks (LaunchDarkly, GrowthBook) enable ‘MVPs’ that live inside production apps, shipping to 1% of users at a time.

- Regulated‑space sandboxes: Fintech, med‑tech, and ed‑tech companies are piloting compliance‑first MVPs inside isolated data enclaves, where real‑world constraints are simulated before live deployment.

- Autonomous product agents: Early research demos show AI agents that can propose hypotheses, spin up a micro‑front‑end, route traffic, and analyze results, cycling autonomously until a human intervenes.
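
Shipping to 1% of users at a time, as in the continuous-experimentation bullet above, is often done with deterministic bucketing: hash the user and flag together, map the hash to a bucket, and enable the feature for buckets below a cutoff. The sketch below is a generic illustration of that technique and is not the actual algorithm used by LaunchDarkly or GrowthBook; the function name `in_rollout`, the flag key, and the choice of 10,000 buckets are assumptions.

```python
import hashlib

BUCKETS = 10_000  # assumed granularity: each bucket is 0.01% of users


def in_rollout(user_id: str, flag_key: str, percent: float) -> bool:
    """Return True if this user falls inside the first `percent` of buckets.

    The same (user_id, flag_key) pair always maps to the same bucket, so a
    user's experience is stable across sessions, and raising `percent` only
    ever adds users to the rollout.
    """
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    digest = hashlib.sha256(f"{flag_key}:{user_id}".encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % BUCKETS
    return bucket < percent * (BUCKETS / 100)


# Hypothetical flag exposed to 1% of users
exposed = [uid for uid in (f"user-{i}" for i in range(100_000))
           if in_rollout(uid, "new-onboarding-flow", 1.0)]
print(f"{len(exposed)} of 100000 users see the experiment")
```

Including the flag key in the hash means different experiments sample different slices of the user base, so the same early users are not always the test subjects.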

The MVP may soon become a perpetual background process rather than a discrete pre‑launch phase.

Key takeaways

- An MVP is a vehicle for validated learning, not a shipping milestone.

- Each era—lean startup, mobile‑first, design thinking, no‑code, and AI—has expanded who can experiment and accelerated iteration speed.

- The next frontier is continuous, autonomous MVPs embedded inside mature products, which could shrink the cycle time from weeks to minutes.

Bottom line: Whether you’re a solo hacker or a global enterprise, the surest way to de‑risk innovation remains the same—launch something small, measure everything, and let the data shape what comes next.