AI acceptance criteria become crucial once we’ve gathered user stories for the upcoming iteration and need to specify them further. In our previous article, we explored how to brainstorm AI user stories using the most popular product management tools. Now, let’s delve into the details and compare these tools to determine which one best supports writing acceptance criteria during the specification process.

How to evaluate AI acceptance criteria tools

To assess AI acceptance criteria tools fairly, we’ll create a transparent environment for comparison.

The goal is to write acceptance criteria for the user stories of a specific example product.

For simplicity and clarity, we’ll choose a product familiar to all product managers — a roadmap tool.

An online roadmap tool that allows product teams to visualize business releases and iterations on a timeline view. The target audience is product teams. Key features should be the following: Creating a roadmap, Roadmap customization, Inviting team members, and Sharing the roadmap with external clients.

We’ll use this input in the compared tools.

Tools we compare:

StoriesOnBoard AI

Miro AI Assist

ClickUp AI

Airfocus AI Assist

Acceptance criteria definition

Before proceeding to the tool comparison, let’s review what we know about acceptance criteria and why they are important.

Acceptance criteria in agile software development are conditions that a software feature must meet to be considered complete. These criteria are important because they ensure everyone involved in the project understands what needs to be done and what the final product should look like.

This clear understanding helps prevent misunderstandings and ensures that the final software works well for the users. The team writes these criteria before they start working on the feature, and they use them to check their work before they say a feature is finished. This way, they can be sure that the software does exactly what it is supposed to do.

Why do acceptance criteria play a crucial role in agile software development?

- Clarifies Requirements: Acceptance criteria provide a detailed explanation of what the feature needs to accomplish, helping to define the scope clearly.

- Improves Communication: They ensure that all team members and stakeholders consistently understand the project goals and what is expected.

- Guides Testing: Acceptance criteria form the basis for creating test cases, ensuring the feature functions as intended.

- Prevents Scope Creep: They define what is out of scope, helping to focus on necessary features and avoid unnecessary work.

We can write acceptance criteria in different ways; here are some of them:

- User story format:

Given [initial context], when [event occurs], then [ensure some outcome].

- Scenario-oriented:

Scenario 1: [Scenario description]

  - Given [initial context],

  - When [action taken],

  - Then [expected result].

- Rule-oriented:

Rules:

  - The system must [requirement 1].

  - The system should [requirement 2].

  - The system will [requirement 3].
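To illustrate how criteria written in these formats can guide testing, here is a minimal Python sketch that turns a Given/When/Then criterion and a rule-oriented criterion for the example roadmap tool's "Inviting team members" feature into executable checks. The `Roadmap` class and its `invite` method are hypothetical stand-ins invented for this illustration; they do not come from any of the tools compared here.

```python
from dataclasses import dataclass, field


@dataclass
class Roadmap:
    """Hypothetical in-memory model of a roadmap (illustration only)."""
    owner: str
    members: set[str] = field(default_factory=set)

    def invite(self, email: str) -> bool:
        """Add a team member; return False if the email is invalid or already invited."""
        if "@" not in email or email in self.members:
            return False
        self.members.add(email)
        return True


def test_invite_team_member() -> None:
    # Given a roadmap with no invited members
    roadmap = Roadmap(owner="pm@example.com")
    # When the owner invites a new team member
    accepted = roadmap.invite("dev@example.com")
    # Then the member appears on the roadmap
    assert accepted
    assert "dev@example.com" in roadmap.members


def test_duplicate_invite_is_rejected() -> None:
    # Rule: the system must not add the same member twice
    roadmap = Roadmap(owner="pm@example.com")
    roadmap.invite("dev@example.com")
    assert roadmap.invite("dev@example.com") is False
    assert len(roadmap.members) == 1


if __name__ == "__main__":
    test_invite_team_member()
    test_duplicate_invite_is_rejected()
    print("all acceptance checks passed")
```

Each comment maps one clause of the criterion to one step of the check, which is why a consistent criteria format makes test cases easier to derive.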

AI acceptance criteria writing

Now, using the product scope described above, let’s compare different AI product management tools to see which one offers the most support for writing specifications.

StoriesOnBoard AI

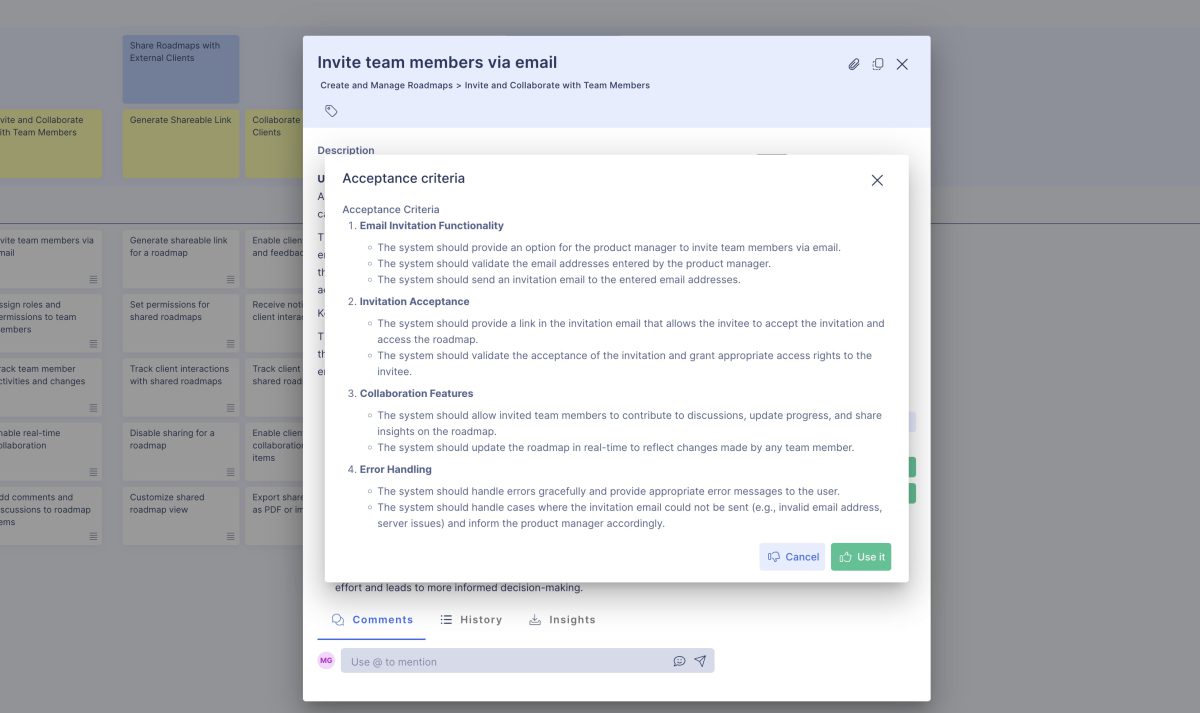

Whether you’ve read the previous article or not, you’re likely familiar with StoriesOnBoard. It’s a well-known user story mapping tool that helps product teams create visual backlogs, known as user story maps. Unlike traditional flat backlogs, StoriesOnBoard organizes user stories into user goals (epics) and user steps (related to the user journey). We’ve already created the backlog, so the next step is to add a feature overview to the story card and use AI to generate the acceptance criteria.

Although StoriesOnBoard provides pre-made and customizable templates for writing AI acceptance criteria, we began with the default settings. The product information had been uploaded beforehand, and the feature overview was already included in the user story description.

The AI provided a dozen acceptance criteria organized into groups. The number of criteria is similar to what the other tools offer. However, the grouping could inspire us to focus more closely on specific topics and write additional criteria. Once you add the criteria, you can press the “Collect Acceptance Criteria” button to generate more items within the existing groups or even see a new group appear. As you can see, the criteria are formatted clearly, making them easy to find in a lengthy description and simple to scan visually.

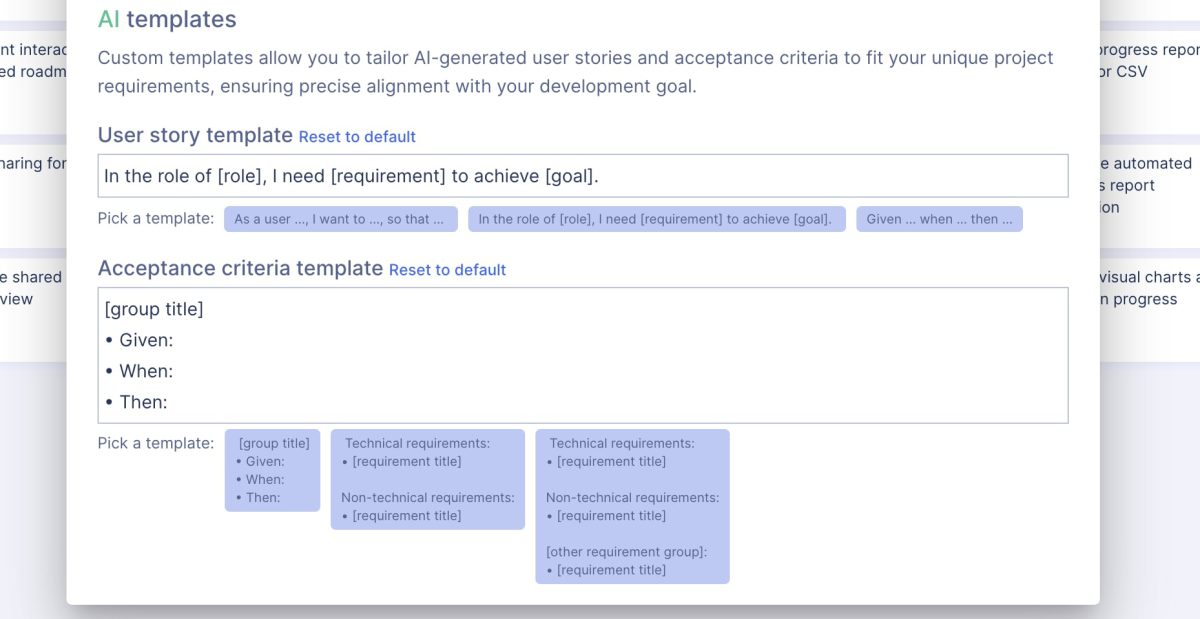

Different teams have different habits and needs, especially regarding the acceptance criteria format. To maintain a smooth delivery process, you can customize the user story and acceptance criteria templates. Additionally, the most popular methods are available as pre-made templates, which you can select with just a single click.

If you prefer a different method, simply use your best approach to list acceptance criteria, conduct a few tests, and refine the template to enhance the output format. Each time you press the “Collect Acceptance Criteria” button, the StoriesOnBoard AI will respond in the customized format you’ve set.

What makes StoriesOnBoard AI stand out compared to other tools is its efficiency—you don’t need to input everything from A to Z to achieve consistent and accurate results across user stories. StoriesOnBoard AI not only reads the user story description and the card title but also grasps the essence of the product. Moreover, each time you make a request, the AI considers the epic, the user step, and other user stories within the epic, ensuring comprehensive understanding and relevance.

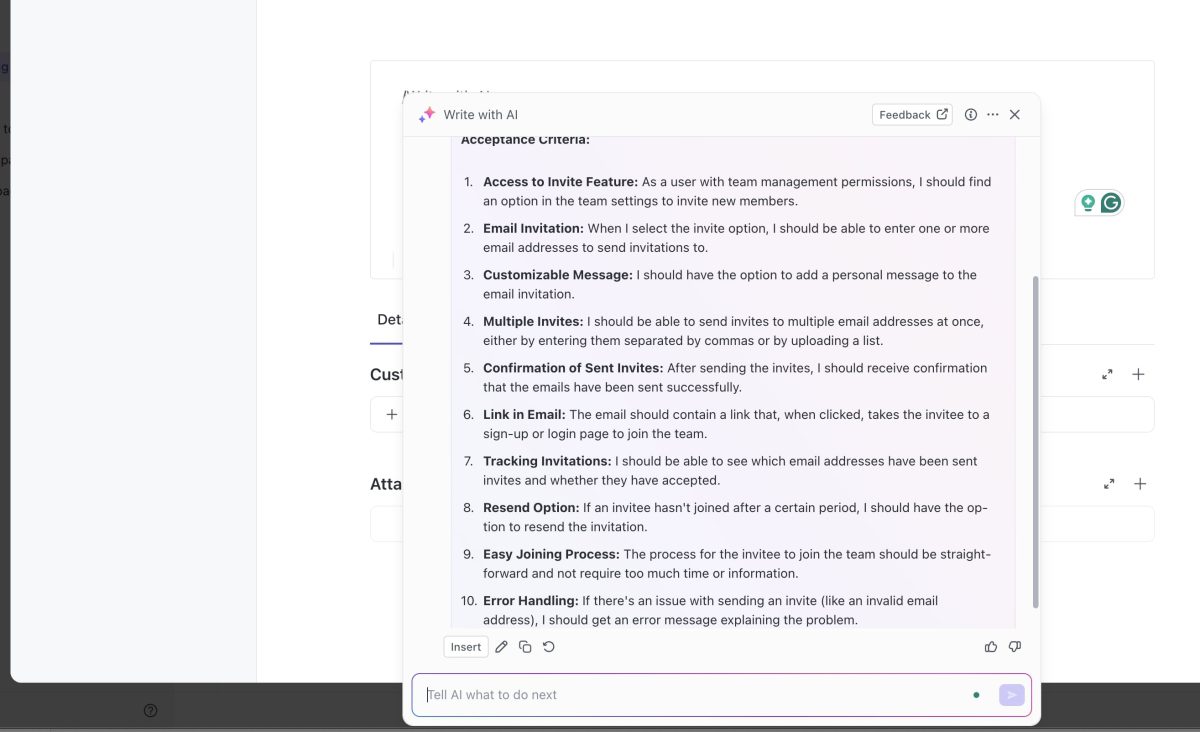

Miro

As mentioned in the previous article, Miro offers an easy-to-use AI chat widget for writing AI user stories. This is a great starting point for discussing backlog items with the Assistant, and the brainstormed items can easily be added to the board with just a few clicks.

To add more details to user stories in Miro, first convert the sticky note to a card. This change unlocks the “backside” of the card, allowing you to add extensive details.

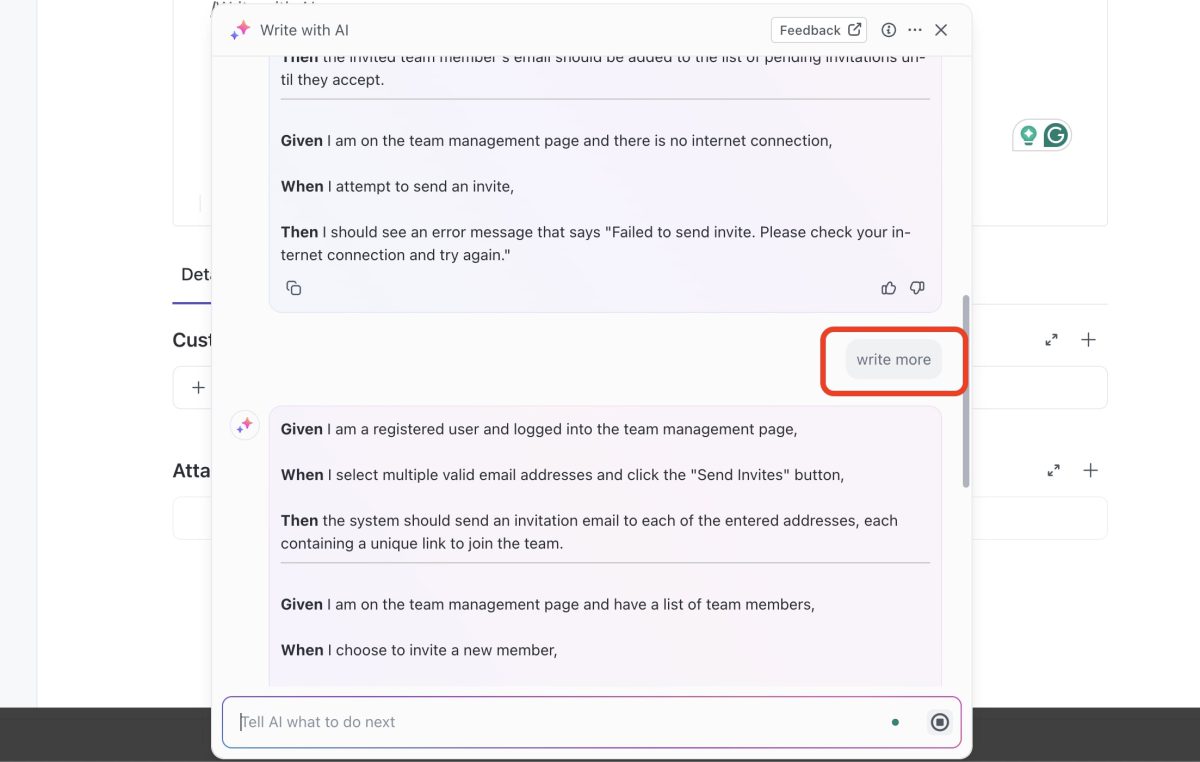

To write acceptance criteria, you don’t need to use the chat widget. Miro has a dedicated function for this—simply select the card and click the AI icon on the toolbar. Acceptance criteria are added immediately. However, this feature has some drawbacks: it generates 10 criteria in a strict format and overwrites any existing content on the card. This doesn’t allow for customization or extension of the results, but it can serve as a good starting point for specifying a backlog item.

If you want to preserve existing content on the card while adding acceptance criteria, you need to revert to using the AI chat widget. Ask the Miro Assistant to gather information directly into the chat window, then manually copy and paste it into the card details. Unfortunately, this process isn’t as seamless as it could be.

Additionally, the Assistant can sometimes behave unpredictably. Although it recognizes the selected item, which eliminates the need to enter the item title manually, it might generate an unexpectedly brief list of acceptance criteria, sometimes as few as a single item.

Miro is a solid choice if you’re looking to start a project quickly, brainstorm several user stories around a project or feature, and add a few initial acceptance criteria. Using the Miro Assistant is straightforward, but for more detailed and custom-tailored acceptance criteria formats, you might need to choose or integrate another tool.

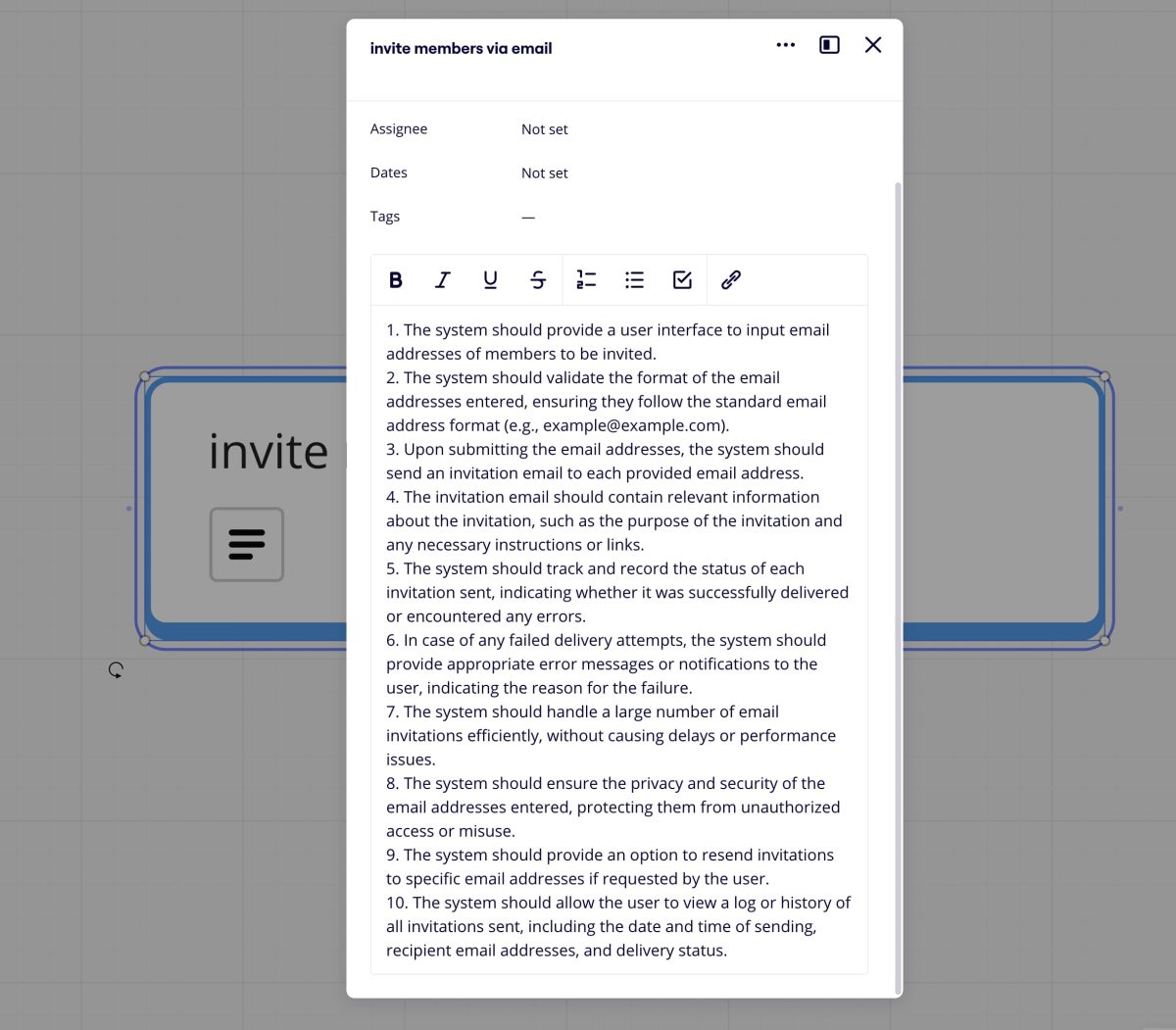

Airfocus AI

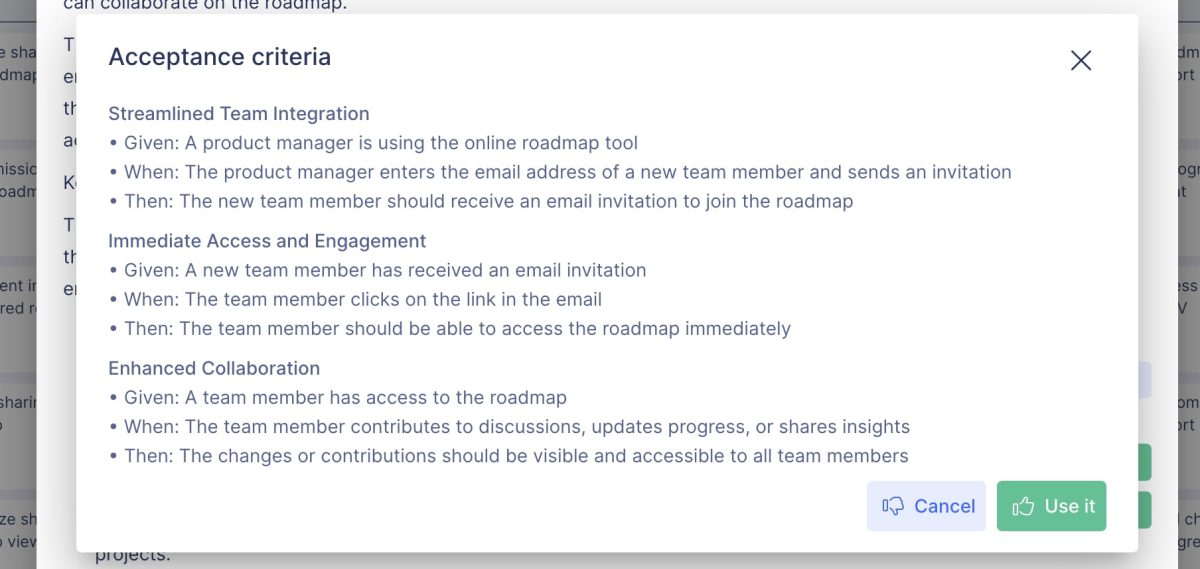

Once you have added backlog items into Airfocus, you can activate the AI function simply by typing “/” anywhere in the product description. A major advantage is that the AI understands the context from the existing card details, so there’s no need to input everything from scratch. However, Airfocus lacks a dedicated repository for adding product-level information, so we included the previously highlighted product information in each card. This workaround isn’t ideal for real-life applications, but it was necessary to maintain fairness in our comparison.

A significant benefit of using Airfocus AI is that when you ask it to write a user story, it not only complies but also adds a plethora of details, including AI acceptance criteria, in a fixed format.

If you only need acceptance criteria, you can create a custom request using the chat widget. Simply input your specifications, and once the AI generates the acceptance criteria, you can easily add the results to the card description.

The results from the Airfocus chat widget are partially formatted; while group items receive numbering, headings are not included. Like other AI functions in Airfocus, all AI commands process the card description. If you need to collect additional items, you can re-enter the prompt without worrying about duplicated AI acceptance criteria.

The built-in function in Airfocus always delivers acceptance criteria in the same format. If you prefer a different format, such as the given-when-then style, or if you want to organize criteria into technical and non-technical groups, you’ll need to manually type in your specifications each time you want to add AI acceptance criteria.

The overall experience with Airfocus is positive, particularly if the premade template meets your needs. It only takes a single command to collect all necessary information for the card description. However, a notable drawback is the need to enter the card title manually each time.

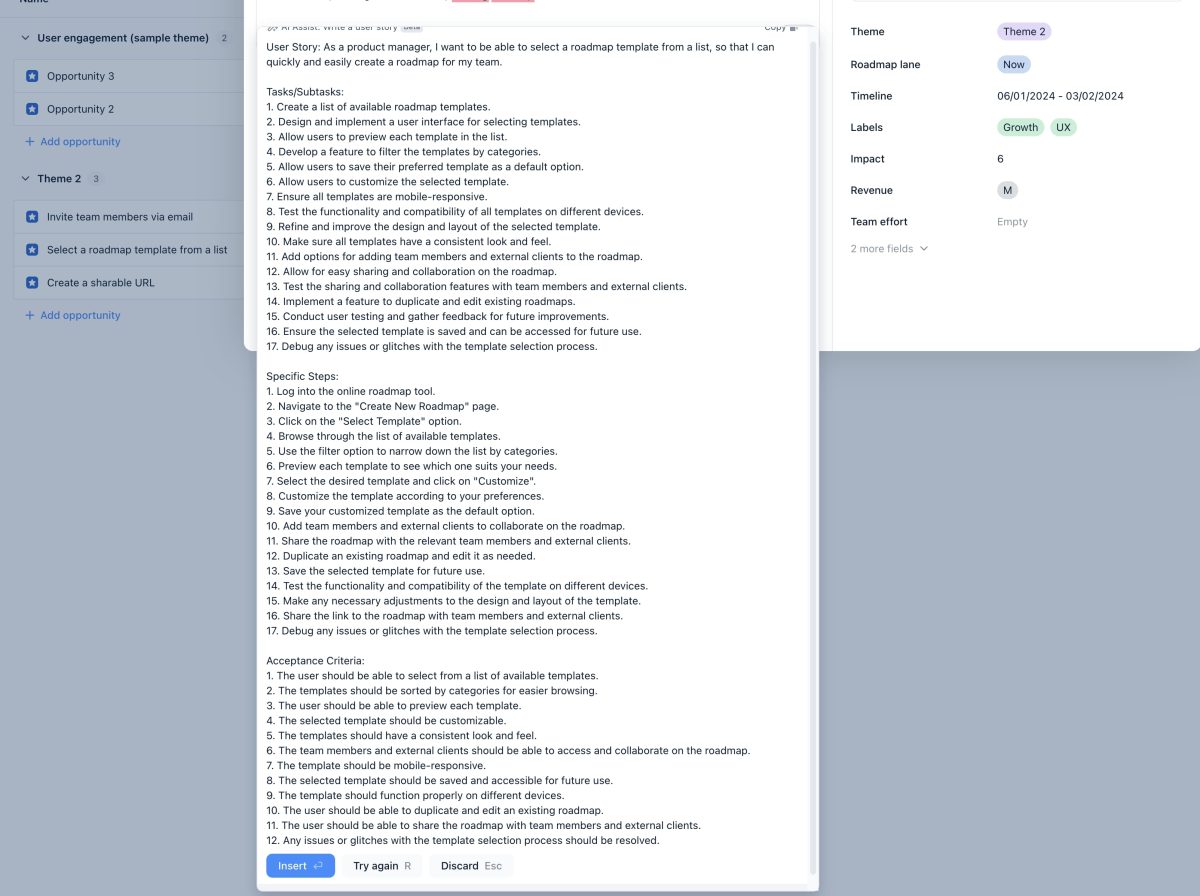

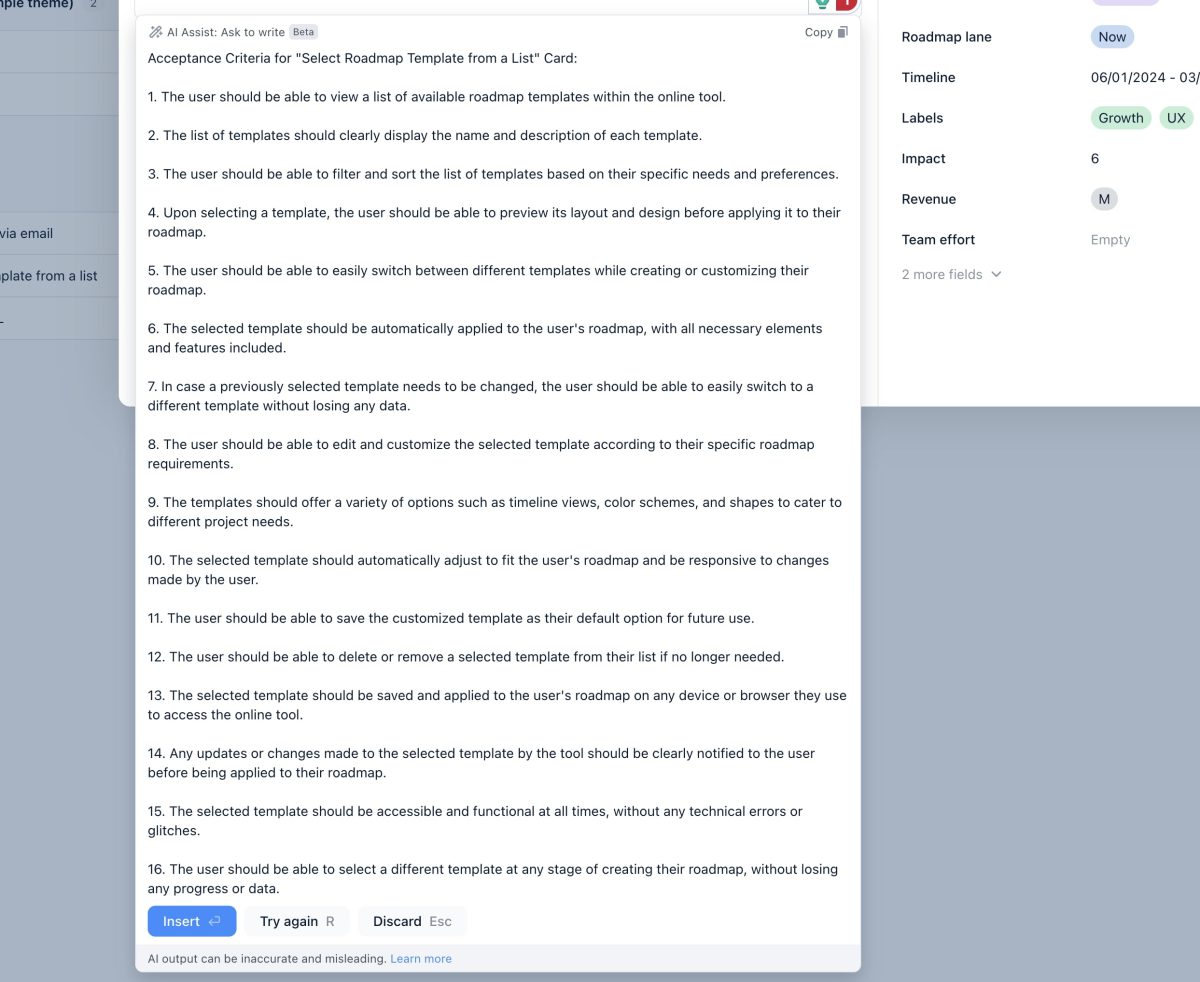

ClickUp AI

As noted in our previous article, ClickUp AI cannot automatically read the card title or description; it works much like chatting with OpenAI’s ChatGPT. This means that you must manually enter the product description, the backlog item (card) title, and any previously added feature overview into the chat widget each time you need to generate acceptance criteria or other details.

ClickUp’s AI output results are similar to those from the ChatGPT app. However, a notable advantage over tools like Airfocus AI or Miro Assistant is the superior output formatting. ClickUp AI frequently uses headings and various formatting styles to enhance the readability and organization of the results.

A useful feature worth highlighting is ClickUp’s ability to continue adding AI acceptance criteria seamlessly. Once you get the initial result you were expecting, you can insert the text and request more acceptance criteria. The additional AI acceptance criteria will match the formatting style of the initial items, and the system is designed to prevent the duplication of AI-generated acceptance criteria.

The quality of acceptance criteria generated is consistent with other tools and largely depends on the input provided when requesting AI acceptance criteria. Providing detailed content and specifying a desired output format can produce more satisfactory results.

Unfortunately, ClickUp has no feature for saving canned prompts, such as one for writing AI acceptance criteria, which makes it harder to reuse effective queries. The only workaround is to save useful prompts in a separate document and copy and paste them each time they are needed. This approach is cumbersome and detracts from the tool’s efficiency.
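One way to make that workaround less painful is to keep the prompt as a small template outside ClickUp and fill it in for each backlog item. The sketch below is a minimal Python illustration under that assumption. The template wording, `ACCEPTANCE_CRITERIA_PROMPT`, and `build_prompt` are hypothetical names made up for this example, not a ClickUp feature, and the resulting text still has to be pasted into the chat widget by hand.

```python
from string import Template

# Hypothetical reusable prompt; the wording is an assumption, not a ClickUp feature.
ACCEPTANCE_CRITERIA_PROMPT = Template(
    "Product: $product\n"
    "Backlog item: $title\n"
    "Feature overview: $overview\n"
    "Write acceptance criteria for this backlog item in the $style format. "
    "Group related criteria under short headings."
)


def build_prompt(product: str, title: str, overview: str,
                 style: str = "Given/When/Then") -> str:
    """Fill the saved template with the details of one backlog item."""
    return ACCEPTANCE_CRITERIA_PROMPT.substitute(
        product=product, title=title, overview=overview, style=style
    )


if __name__ == "__main__":
    print(build_prompt(
        product="An online roadmap tool for product teams",
        title="Inviting team members",
        overview="Roadmap owners can invite colleagues by email.",
    ))
```

Keeping the product description, card title, feature overview, and output format as separate fields mirrors the inputs that have to be typed into the chat each time, so only the parts that change between backlog items need editing.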

The overall experience with ClickUp’s AI acceptance criteria writing features is less favorable compared to other tools for several reasons:

- The AI Assistant cannot access existing content within a backlog item where the prompt is initiated.

- There is no built-in function specifically for writing acceptance criteria.

- The tool requires significant manual input, so it saves little time.

- There are no options to customize the results to better fit specific needs or preferences.

Summary

Writing AI acceptance criteria can significantly accelerate the specification process. AI-powered tools can generate robust acceptance criteria, making it easier to expand your list. While product management tools using OpenAI deliver nearly identical quality in acceptance criteria, differences in user experience and time-saving capabilities become apparent depending on the project’s complexity.

Miro is useful for kickstarting a project or for quickly exploring a specific problem or feature: collecting user stories and adding the first AI acceptance criteria is straightforward. However, if you require more detail and a deeper understanding of your product, you’ll find yourself limited by the available functionality. StoriesOnBoard AI, Airfocus AI, and ClickUp AI offer multiple approaches to extend your specifications with AI acceptance criteria, but only StoriesOnBoard AI can significantly save time with its customizable templates and understanding of the product and user story context. The other tools require a lot of manual typing to deliver usable results, and those results are sometimes inconsistent.